An NPS survey is a specialized questionnaire designed to include the NPS rating question and usually associated follow-up questions. In the following sections, we will discuss how these questionnaires are designed, and how surveys based on these questionnaires are planned and executed.

NPS Questionnaires

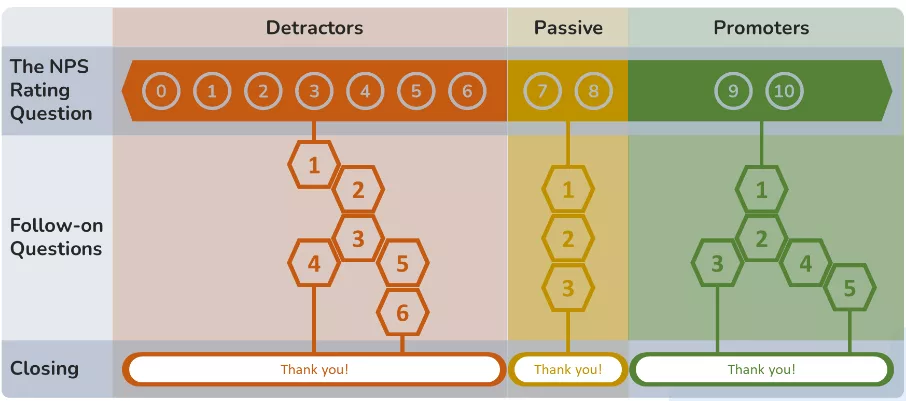

An NPS questionnaire consists of three sections: the NPS rating question itself, the follow-up question(s), and the closing/thank-you section. As we’ve learned, the NPS rating question uses a 0 to 10 scale, with 0-6 responses classified as Detractors, 7-8 as Passives, and 9-10 as Promoters.
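The classification above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function names and the sample ratings are made up, and the score is computed with the standard NPS formula (percentage of Promoters minus percentage of Detractors).

```python
from collections import Counter


def classify(score: int) -> str:
    """Map a 0-10 rating to its NPS group."""
    if not 0 <= score <= 10:
        raise ValueError(f"score must be between 0 and 10, got {score}")
    if score <= 6:
        return "Detractor"
    if score <= 8:
        return "Passive"
    return "Promoter"


def nps(scores: list[int]) -> float:
    """NPS = percentage of Promoters minus percentage of Detractors."""
    if not scores:
        raise ValueError("at least one response is required")
    groups = Counter(classify(s) for s in scores)
    return 100 * (groups["Promoter"] - groups["Detractor"]) / len(scores)


# Made-up sample: 4 Promoters, 3 Passives, 3 Detractors.
ratings = [10, 9, 8, 7, 6, 3, 10, 9, 5, 8]
print(nps(ratings))  # 10.0
```

Passives count toward the total number of responses but not toward either percentage, which is why they still influence the score.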

While a survey for NPS could solely include the NPS rating question, this would not provide insights into the drivers of the score. Follow-up questions help identify these drivers, which form the basis for actions to maintain Promoters and improve your standing with Passives and Detractors. Promoter feedback offers insights into what you’re doing right and how to increase customer referrals, while Detractor feedback provides opportunities to retain them as customers.

It’s advisable for follow-up questions to be tailored to different NPS rating question outcomes. The questions you ask a Passive responder should naturally differ from those posed to a Detractor.

Follow-up questions do not have to be linearly sequenced; conditional branching can be employed to determine the next question based on the current response.
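As a rough sketch of conditional branching, the flow can be expressed as a function that returns the next question from the current question and its answer. The question IDs, the wording of the follow-ups, and the particular flow (Detractors are asked for permission to be contacted, everyone ends on a thank-you) are assumptions chosen for illustration only.

```python
from typing import Optional

# Hypothetical question IDs and wording, for illustration only.
QUESTIONS = {
    "rating": "How likely are you to recommend our company to others on a scale of 0 to 10?",
    "promoter_reason": "What do you like most about our company?",
    "passive_reason": "What would make you more likely to recommend our company?",
    "detractor_reason": "What is the main reason for your score?",
    "contact_ok": "May we contact you about your feedback?",
    "thanks": "Thank you for your time!",
}


def next_question(current: str, answer: object) -> Optional[str]:
    """Return the ID of the next question, or None when the survey is over."""
    if current == "rating":
        if answer >= 9:
            return "promoter_reason"
        if answer >= 7:
            return "passive_reason"
        return "detractor_reason"
    if current == "detractor_reason":
        return "contact_ok"
    if current in ("promoter_reason", "passive_reason", "contact_ok"):
        return "thanks"
    return None  # "thanks" is the last step


def path_for(rating: int) -> list[str]:
    """List the question IDs a responder with this rating would see."""
    path = ["rating"]
    answer: object = rating
    while (nxt := next_question(path[-1], answer)) is not None:
        path.append(nxt)
        answer = None  # later answers do not affect branching in this sketch
    return path


print(path_for(3))   # ['rating', 'detractor_reason', 'contact_ok', 'thanks']
print(path_for(10))  # ['rating', 'promoter_reason', 'thanks']
```

Keeping the branching rules in one function makes it easy to check every path a responder can take before the survey goes out.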

Let’s dive deeper into these sections.

The NPS rating question

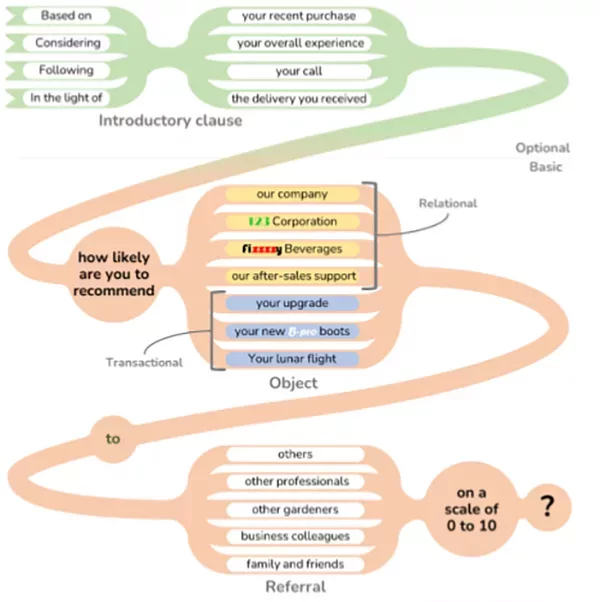

In the beginning, we introduced the NPS rating question as “How likely are you to recommend our company to others on a scale of 0 to 10?” Now, let’s explore the structure of this question and how it can be adapted for different purposes.

Firstly, the term “company” in the basic question can be replaced with other organizational objects such as “business,” “department,” the actual name of a business/department, or a brand name. When the question is aimed at gaining overall insight into the business-customer relationship, it’s called a relational NPS rating question. You can also engage the customer regarding a particular product they recently purchased, service they received, or any other part of the customer experience. In this case, the rating question is termed transactional.

Data gathered through relational NPS rating questions are particularly useful for benchmarking against internal or external NPS data. These questions are typically part of periodic surveys (such as quarterly or annually) because they aim to understand the customer’s overall view of the relationship. It’s best to avoid executing surveys during or immediately after specific customer experiences to prevent these experiences from biasing the overall customer perspective on your business.

Transactional questions allow metrics to be collected at various points in the customer lifecycle for departmental action and optimization. Transactional surveys are typically triggered by customer events such as post-purchase, post-call center contact, new customer registration, product changes, service completion, and others.

Another aspect to consider is the referral part of the question. If you want to query the customer’s likelihood of referring your organization or product to a specific group of people, you can replace “others” with a more specific designator.

In addition to changing the object and referral parts of the question, an introductory clause may optionally be placed at the start of the question, such as “following your recent purchase” or “considering your overall purchase.” This steers the customer’s response toward a particular touchpoint of the customer journey or, conversely, toward the overall experience.

With these minor modifications, you can adapt the NPS rating question to address a wide variety of data collection requirements, from assessing departmental customer relationship performance to evaluating the reception of product changes and gauging overall brand loyalty.
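The three adjustable parts described above (object, referral audience, and optional introductory clause) can be treated as a simple template. The sketch below is illustrative only; the function name, parameter names, and example values are assumptions.

```python
def build_rating_question(
    obj: str = "our company",
    audience: str = "others",
    intro: str = "",
) -> str:
    """Assemble an NPS rating question from its adjustable parts."""
    question = f"how likely are you to recommend {obj} to {audience} on a scale of 0 to 10?"
    if intro:
        # The introductory clause leads the sentence, so it takes the capital letter.
        return f"{intro[0].upper()}{intro[1:]}, {question}"
    return question[0].upper() + question[1:]


# Relational form: the basic question, unchanged.
print(build_rating_question())

# Transactional form: specific object, audience, and touchpoint.
print(
    build_rating_question(
        obj="our support team",
        audience="your colleagues",
        intro="following your recent purchase",
    )
)
```

The first call reproduces the basic question; the second yields "Following your recent purchase, how likely are you to recommend our support team to your colleagues on a scale of 0 to 10?"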

Follow-on questions

The NPS rating question may be followed by one or more questions. Each question should consider:

- Purpose of the question (information sought)

- Object of the question (same as the object of the NPS rating question)

- Audience of the question (general public or specialists? Detractors, Passives, or Promoters?)

- Processing of results (how elicited responses will be used/analyzed)

- Conditional branching (if any) leading to and from the question

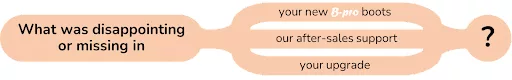

Let’s start with the following basic, generic follow-on question that references the object of the NPS rating question. It’s not conditional on the rating outcome and addresses all responders, whether they are Detractors, Passives, or Promoters.

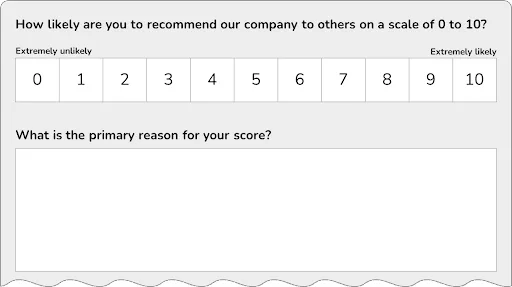

We can imagine two ways of prompting for a response to this question: We may prompt for a free text answer, in which case our survey might look something like the following:

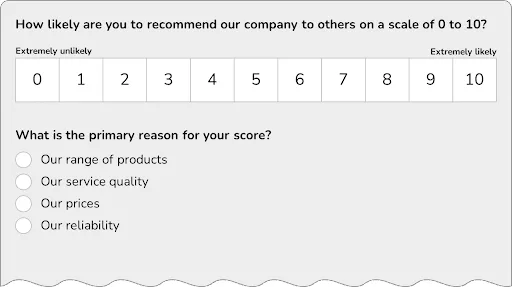

This kind of answer is referred to as open-ended. Alternatively, we may present responders with a menu of pre-determined options. In this case, our survey might resemble the following:

This type of response is referred to as closed-ended. Different types of closed-ended responses are available. The depicted type is single-choice selection, allowing one item to be selected. Other closed-ended response types include dichotomous (True/False or Yes/No), multiple-choice selection (allowing more than one item to be selected), rating scales (like the NPS rating question itself), and ranking order (asking responders to order presented items according to preference).

The important distinction between open and closed-ended responses lies in how they will be processed and analyzed. Open-ended responses are exploratory and qualitative, potentially providing unexpected insights into customer opinions. However, automated processing of these responses is challenging.

Open-ended responses work best for small survey populations, especially those consisting of qualified responders (e.g., purchasing managers of wholesalers in a B2B scenario).

Even when closed-ended responses are preferred, open-ended response fields may be provided as an outlet for respondents who need to share additional concerns or feel that the options provided are inadequate (e.g., an “Other” option followed by a “Please explain” text field).

Regarding the structure of the question itself, when there are multiple follow-on questions, it can be beneficial to refer to the object of the question to increase the likelihood that the responder stays on target. The question can be re-phrased according to the audience. The “recommend” version can be posed to a Promoter, and the “not recommend” version to a Detractor. Both versions of the question could be posed to Passives.

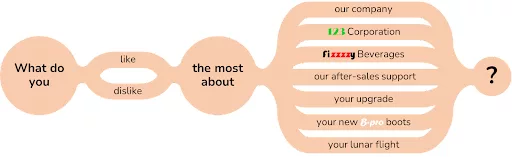

Another form may be used to identify the primary aspects/features of the object of the question that are most liked/disliked by the customer. These aspects/features become primary targets for protection, enhancement, and promotion (in the “like” case) or immediate remedial action (in the “dislike” case).

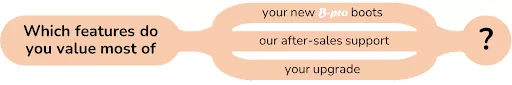

To prioritize feature roadmaps, product managers might prefer a more concise question of the following form with closed-ended responses of multiple-choice or ranking-order type:

For Detractors, a similarly structured question might be of the following form:

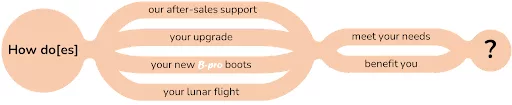

A subtle difference in the question can shift the emphasis to gaining customer insight for product/service improvement and understanding if your products and services actually deliver the particular value you envisioned.

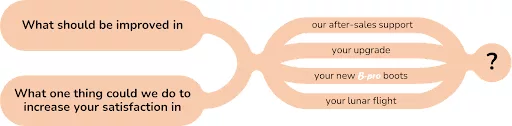

Questions with the following structure aid in uncovering improvements that lead to enhanced customer experience. There are always improvement opportunities, making this question type suitable for all audiences.

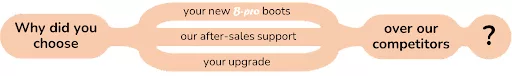

Marketing may be interested in advantages vis-à-vis the competition, which may be investigated through a question with the following structure:

The last follow-on question may be regarding follow-on actions. Detractors may be asked if they are willing to be contacted, and Promoters may be asked for permission to share a testimonial.

Closing

To appreciate the time and effort of the responder, a “Thank You” should be placed after follow-on questions. In addition, call-to-action elements in the form of buttons, forms, links, and other components can be used to offer promotions, product information, subscriptions, and other contextually relevant material.

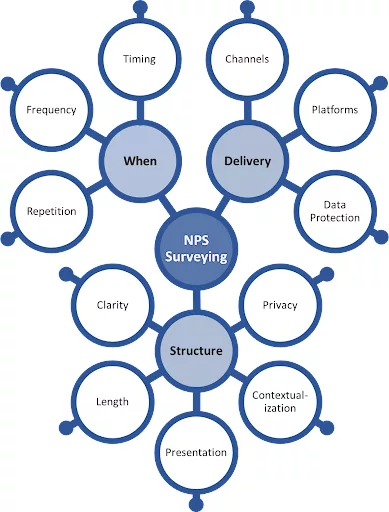

Survey design

In this section, we will discuss how the NPS questionnaire is used to constitute a successful NPS survey. Let’s begin with a definition:

A good NPS survey design poses a correctly formulated NPS rating question together with a set of follow-on questions suitable for exposing the drivers of the respondents’ NPS ratings. It is structured, worded, and delivered in a timely manner so that it elicits correct and unbiased responses from the maximum number of customers surveyed.

As a starting point, it is worthwhile considering why we need to maximize responses, because many of the design attributes we will be reviewing share this particular goal.

We’ve seen the potential effects of bias in NPS calculation. If we are confident that the respondents are representative of the customer population, then non-responders are not a concern. If only 10% of those surveyed respond to the NPS questionnaire, but every customer segment is represented among the respondents in the same proportion as in the overall customer population, then we have no bias and no issue.

The problem is that we can never be truly confident of this. Our fallback position is to reduce the portion of non-responders as much as possible, thereby reducing potential sources of bias. This is why good surveys aim to maximize the Survey Response Rate (SRR). In addition, as we will discuss later, individual customer feedback is a very useful tool that can be used to great effect.
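To make the representativeness argument concrete, the following sketch computes a response rate and compares each segment’s share among respondents with its share in the surveyed population. It assumes SRR is simply responses divided by customers surveyed; the segment labels and counts are made up.

```python
from collections import Counter


def response_rate(responses: int, surveyed: int) -> float:
    """Survey Response Rate: share of surveyed customers who responded."""
    if surveyed <= 0:
        raise ValueError("surveyed must be positive")
    return responses / surveyed


def segment_shares(segments: list[str]) -> dict[str, float]:
    """Fraction of the list that falls into each segment label."""
    counts = Counter(segments)
    return {seg: n / len(segments) for seg, n in counts.items()}


def representation_gaps(population: list[str], respondents: list[str]) -> dict[str, float]:
    """Respondent share minus population share, per segment (0 means no gap)."""
    pop = segment_shares(population)
    resp = segment_shares(respondents)
    return {seg: resp.get(seg, 0.0) - share for seg, share in pop.items()}


# Made-up data: 100 surveyed customers, 10 of whom responded.
population = ["retail"] * 60 + ["wholesale"] * 40
respondents = ["retail"] * 9 + ["wholesale"] * 1

print(response_rate(len(respondents), len(population)))
gaps = representation_gaps(population, respondents)
print({seg: round(gap, 2) for seg, gap in gaps.items()})
```

Here the response rate is 10%, but retail customers make up 90% of respondents against 60% of the population, so the resulting score would lean toward retail opinion. With 6 retail and 4 wholesale respondents, the same 10% rate would show no gap.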

With this in hand, let’s start unpacking aspects of NPS survey design.

Structuring survey content

Thoughtfully designed questionnaires play a crucial role in preventing customers from abandoning surveys and providing erroneous responses. This is an extensive topic, but here are some guiding principles.

Contextualization: Contextualizing questionnaires to align with the specific characteristics, experiences, and needs of the surveyed customers is an effective technique to prevent misunderstandings and ensure meaningful responses. Contextualization works through the formulation of templates for NPS rating and follow-on questions as discussed previously.

Strategies for contextualization include:

- Personalization of questionnaires by incorporating the customer’s identity and related information, creating a unique survey that fosters engagement and conveys the customer’s value.

- Ensuring that questions are highly relevant to the customer and the specific stage on the customer journey by carefully screening questions for applicability.

- Implementing conditional logic that adapts the flow of questions based on the responses to preceding questions, ensuring a tailored experience for each customer.

- Presenting questions in the customer’s preferred natural language and utilizing a linguistic style and tone that resonates with the individual, making the survey more relatable.

Clarity: Both the NPS rating question and follow-on questions should be clear and concise, avoiding the use of technical terms, abbreviations, and acronyms. Using simple, straightforward language ensures that customers can easily understand and respond to the questions. Caution should be exercised when surveying populations encompassing multiple languages. Frequently, translated versions of the questionnaires do not possess the same expressive qualities as the original, which may lead to response bias amongst language groups.

Length: Excessively lengthy surveys can lead to respondent fatigue and increased chances of survey abandonment. To combat this, questionnaires should be designed to require minimal effort and include only the questions necessary for data collection purposes. Length should be kept to the minimum required to achieve survey objectives.

Privacy: To ensure the privacy of respondents and minimize survey abandonment, make non-essential and potentially sensitive questions non-mandatory. Providing options such as “Prefer not to answer” for these questions can enhance respondent comfort and participation.

Presentation: Taking into account all the communication channels over which the survey will be delivered (discussed below), audio/visual elements such as color themes, layout, font selection and sizing, pictorial guides, chimes and others should be pre-configured and employed uniformly to express brand identity and provide cognitive appeal globally and/or for particular customer groups.

Determining when to survey

Deciding when to send out NPS surveys is a critical aspect of successful survey execution. It involves addressing three key elements: Timing, Repetition, and Frequency.

Timing revolves around determining the most appropriate moment for customers to receive the questionnaire. For instance, sending an NPS survey immediately after a purchase may risk receiving responses based on first impressions rather than a deeper understanding of the product’s features. Conversely, for a service or experience, conducting the survey immediately afterward is often the most suitable time. However, waiting too long carries its own risks, as customers might have forgotten their initial thoughts. When dealing with products and services linked to specific calendar events, it’s essential to consider how surveys are timed relative to the event, that is, pre-purchase, during the event, or post-purchase. Surveys that arrive at inconvenient times are more likely to be ignored, lowering the Survey Response Rate (SRR) and potentially introducing bias into the results.

Repetition refers to the number of attempts made to elicit customer responses for survey completion. It involves determining how many efforts will be made to prompt customers to fill out a survey or complete a partially filled-out survey.

Survey frequency represents the number of NPS surveys conducted within a specific time frame. Transactional NPS surveys focusing on particular waypoints of the customer journey generally expect to receive a single response. However, for certain products and services, like subscriptions or long-running contracts, multiple surveys may be conducted over time. More generally, relational NPS surveys that aim to gauge overall perceptions of a company or brand are periodically distributed. The frequency of these surveys should be aligned with a business’s data collection requirements but must be carefully balanced to avoid overwhelming customers with excessive survey requests.
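Repetition and frequency rules lend themselves to simple guard checks before a survey or reminder is sent. The sketch below is illustrative only: the cap of two reminders and the 90-day quiet period are assumed values, not recommendations from this text, and should be set according to your own data collection requirements.

```python
from datetime import date, timedelta
from typing import Optional

MAX_REMINDERS = 2               # assumed cap on follow-up prompts per survey
MIN_GAP = timedelta(days=90)    # assumed quiet period between surveys


def may_send_reminder(reminders_sent: int, completed: bool) -> bool:
    """Repetition: remind only while the survey is incomplete and under the cap."""
    return not completed and reminders_sent < MAX_REMINDERS


def may_send_survey(last_surveyed: Optional[date], today: date) -> bool:
    """Frequency: survey only if the customer was never surveyed or the gap has passed."""
    return last_surveyed is None or today - last_surveyed >= MIN_GAP


print(may_send_reminder(reminders_sent=1, completed=False))  # True
print(may_send_survey(date(2024, 1, 1), date(2024, 2, 1)))   # False
print(may_send_survey(date(2024, 1, 1), date(2024, 6, 1)))   # True
```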

Delivering and presenting the survey

We now turn our attention to ensuring that the NPS survey reaches its intended recipients and is accessible to them in a convenient set-up.

An important consideration is determining a suitable survey channel. Email, mail, telephone calls, web applications, and other channels may be the preferred medium for different customer groups. Having knowledge of customer preferences and the ability to deliver the survey over multiple channels will increase the likelihood of customers being aware of and responding to the survey.

In addition to the delivery channel, the device and software platform on which the survey will be answered must be considered. If you employ a particular technology to present the NPS questionnaire interactively, you must be confident that your customers have suitable browsers that support it. If your customers are just as likely to use smartphones or tablets as a computer, then the presentation of the survey should adapt to the limitations of all these devices.

Email delivery requires particular attention. Recipients who are inundated with emails and irrelevant content will be conditioned to discard emails that they perceive as general marketing material. Therefore, customizing and personalizing the subject line of the email delivering the NPS survey is important to identify the email as directly relevant to the recipient.

Concerns about privacy and the protection and use of data may deter some respondents. Such concerns typically differ between demographic groups (B2C) and industries (B2B), and may be a source of bias in the survey results. These concerns must be addressed by clearly communicating to surveyed customers how the data will be used and how it will be protected.

What about incentives?

Incentives such as discounts, access to digital resources, vouchers, and similar rewards are sometimes suggested as a way to motivate participants to respond. The idea is that many customers might not bother to respond without incentives, and that those who respond anyway may have stronger motivations or opinions than the overall survey population, hence skewing the results. Be warned that incentives are highly likely to introduce other kinds of bias into the results, because different segments of the surveyed population respond to them differently. Incentives should only be employed after careful consideration of the potential impacts on the results.

Surveying for B2B vs B2C

NPS surveying for B2B is based on the same principles and employs the same techniques as B2C. However, there are differences that need to be carefully thought out in B2B survey design.

In B2B scenarios, the customer population is likely to be much smaller, and the difference in importance between individual customers much larger, than in B2C scenarios. This increases the importance of reaching and getting a response from most customers and from all critical customers.

Typically, B2B customers are more insightful regarding their industry and may have unique perspectives on your products within the context of that industry. This increases the importance of particular responses over general averages, and may necessitate uniquely designed actions in response.

A feature of B2B NPS surveying that is both an advantage and a drawback is that purchasing decisions usually involve multiple individuals, such as purchasing officers, technical experts, and mid- and senior-level managers. The response of each of these individuals to the NPS rating question may differ significantly. This poses challenges to both collecting unbiased data and interpreting it.

To obtain a representative score, feedback must be obtained from multiple individuals with different roles representing the same customer. This raises the question of how the results will be counted. As the number of responses may differ from customer to customer, we face the risk of some customers being over- or under-represented in the results.

One method to ensure that each customer is counted only once is to take the average or weighted average of the responses from the different roles representing that customer. This can in turn raise the issue of fractional values that fall on the boundary between response groups, such as 6.6 (Detractor or Passive?) and 8.5 (Passive or Promoter?).
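The boundary issue can be illustrated with a short sketch. The role names, weights, and both classification policies below are assumptions chosen for illustration; the text does not prescribe which policy to use, only that the choice should be made deliberately.

```python
import math


def customer_score(responses: dict[str, int], weights: dict[str, float]) -> float:
    """Weighted average of the ratings given by the roles at one customer."""
    total_weight = sum(weights[role] for role in responses)
    return sum(score * weights[role] for role, score in responses.items()) / total_weight


def classify_strict(score: float) -> str:
    """Policy A (assumed): a group is reached only at its integer threshold."""
    if score >= 9:
        return "Promoter"
    if score >= 7:
        return "Passive"
    return "Detractor"


def classify_rounded(score: float) -> str:
    """Policy B (assumed): round half up to an integer, then classify."""
    return classify_strict(math.floor(score + 0.5))


# Hypothetical customer with three respondents; the manager counts double.
responses = {"purchasing": 6, "technical": 9, "manager": 7}
weights = {"purchasing": 1.0, "technical": 1.0, "manager": 2.0}
print(customer_score(responses, weights))  # 7.25

for value in (6.6, 8.5):
    print(value, classify_strict(value), classify_rounded(value))
```

Under the strict policy, 6.6 is a Detractor and 8.5 a Passive; under the rounding policy, they become a Passive and a Promoter. Either policy is defensible, but it must be applied consistently. Note that the sketch rounds half up explicitly, because Python’s built-in `round()` rounds halves to the nearest even integer and would turn 8.5 into 8.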

Another method is to count only the response received from the highest-level decision maker (whilst still analyzing the feedback of respondents in other roles separately).

Alternatively, the results from each role may be treated as separate NPS surveys. In this case, different scores may be expected from the same customer depending on the role. A managerial role might give your product a lower overall score due to pricing considerations, compared to technical experts who value your product for its technical characteristics.

It is important that the method chosen for handling B2B NPS survey data be documented and that the same method be applied across surveys, to ensure consistent and comparable data sets.

All of these approaches rely, to varying degrees, on being able to establish a model of the role relationships relevant to your customer base, and on your ability to establish and maintain a database of contact role designations as a prerequisite to NPS surveying.